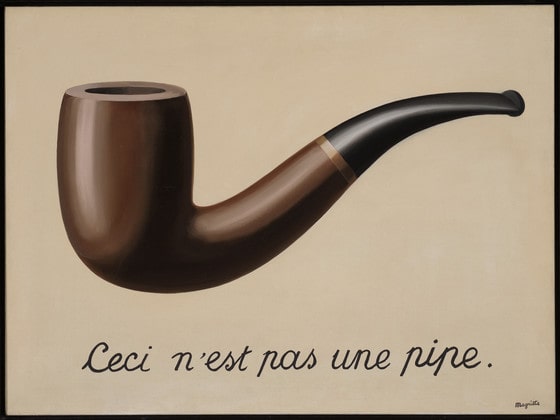

About The Artwork

Inspired by René Magritte's 1929 painting The Treachery of Images, this final coding project aims to convey the same message Magritte expressed about the deceptiveness of representation.

With the advent of the avatar, we discover a rare opportunity for the reinvention of 'self'. No longer bound to our physical vessel, we are invited to project imaginative boundaries, or to reflect on and re-assimilate our perceived and actualized 'me'. This double-edged sword offers a unique choice to the participant: a fork in the road based on intention and personal engagement.

Is the avatar an act of deception, or a more honest representative reality? Like most technology, the avatar is amoral in nature until wielded within a participant's personal context and worldview. We discover each side of the coin. Is this fragmentation, deception, or liberation?